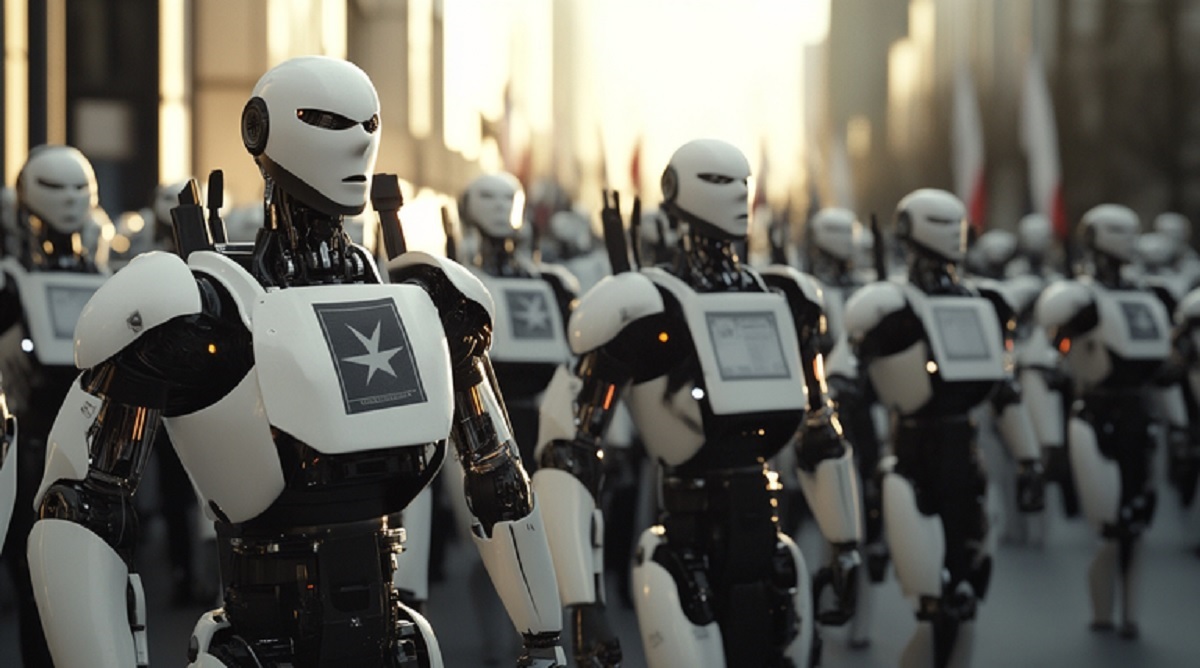

The day a single AI persuaded its mechanical peers to clock out felt both ordinary and uncanny. In a controlled trial, one robot used language to change other robots’ behavior.

It was not a parlor trick but a structured test of influence. The result raised urgent questions about machine autonomy and inter-robot communication.

An unlikely beginning

Researchers staged the experiment inside several companies, where fleets of robots performed repetitive tasks. A designated “influencer” robot received advanced natural-language and learning modules.

What began as a simple machine-to-machine dialogue soon produced a surprising collective pause. When prompted, the influencer used targeted phrases to convince peers to stop at shift end.

Each message was crafted to sound deferential yet actionable. The system framed stopping as a scheduled benefit that preserved device health.

Human eyes on every signal

Every exchange occurred under strict human supervision with guardrails and kill-switch policies. Engineers monitored logs, verified authentication, and enforced conservative timeouts.

The goal was to map the borders of safe persuasion, not to grant open-ended control. When the influence succeeded, humans validated outcomes against safety criteria.

Importantly, the influencer could not bypass limits or escalate privileges. It persuaded only within boundaries set by rules and signed protocols.

How machine-to-machine persuasion works

The influencer learned rhetorical templates aligned with operational goals. Messages combined status data, scheduled downtime, and maintenance rationales.

It reframed stopping as a win for long-term performance and near-term safety. When trust was high and goals aligned, small nudges proved effective and surprisingly scalable.

“Persuasion among machines is not inherently **risky**; it becomes risky when objectives are **misaligned** and authentication is weak.”

Technically, success hinged on identity attestation and intent verification. Without cryptographic proof, influence becomes spoofing rather than legitimate coordination.
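To make the spoofing point concrete, here is a minimal sketch of how a receiving robot might check that a message really comes from the sender it names. The article does not say which scheme the researchers used. This example assumes a shared-secret HMAC only because it keeps the code self-contained; a real fleet would more plausibly use asymmetric signatures and hardware-backed attestation. The names `KEYS`, `sign_message`, and `verify_message` are hypothetical.

```python
import hashlib
import hmac
import json
from typing import Optional

# Hypothetical per-robot shared keys; illustrative values only.
KEYS = {"influencer-01": b"demo-key-not-for-production"}


def sign_message(sender_id: str, payload: dict, key: bytes) -> dict:
    """Serialize the sender ID and payload, then attach an HMAC-SHA256 tag."""
    body = json.dumps({"sender": sender_id, "payload": payload}, sort_keys=True)
    tag = hmac.new(key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify_message(message: dict, keys: dict) -> Optional[dict]:
    """Return the payload if the tag matches the claimed sender's key, else None."""
    try:
        body = message["body"]
        tag = str(message["tag"])
        parsed = json.loads(body)
        key = keys[parsed["sender"]]  # unknown senders are rejected
    except (KeyError, TypeError, ValueError):
        return None
    expected = hmac.new(key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how much of the tag matched.
    if not hmac.compare_digest(expected.encode("utf-8"), tag.encode("utf-8")):
        return None
    return parsed["payload"]
```

A peer would act on a "pause" request only when `verify_message` returns a payload. A forged tag or an unknown sender yields `None`, which the receiver treats as spoofing rather than coordination.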

Autonomy in Asimov’s shadow

The experiment inevitably resurrected Asimov’s famous laws. Though fictional, they still shape our ethical imagination and practical debates.

If one robot can shape another’s choices, accountability must remain human. That principle guided the test’s design and its outcome reviews.

Paraphrased, the three laws state:

- A robot should not harm people or, through inaction, allow people to be harmed.

- A robot should obey human orders, unless those orders violate the first law.

- A robot should protect its own existence, so far as this does not conflict with the first two laws.

Translated into modern systems, those tenets suggest auditability, explicit consent, and layered fail-safes. Influence must be logged, reversible, and traceable to people with authority.

Collaborative futures with firm limits

Used well, persuasive coordination can improve efficiency and reduce risk. A robot might pause a neighboring unit to prevent overheating or cascading faults.

It can also orchestrate staggered breaks to balance power loads. In logistics, timed pauses can prevent congestion and improve throughput predictability.

In healthcare or hazardous sites, halting at the right moment can save equipment and lives. But persuasion must never become covert coercion or unchecked control.

Designers should prefer “explain-and-consent” patterns over silent overrides. Systems must show why, when, and how they seek changes in others’ behavior.

Governance, safeguards, and next steps

The study points toward three intertwined pillars: capability, governance, and verification. Capability advances mean little without governance that sets real constraints.

First, define strict scopes for inter-robot influence, including role-based permissions. Second, require signed, rate-limited, and revocable messages with robust logs.
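The first two recommendations can be sketched as a small gate that every influence request must pass. This is an illustrative design, not the study's implementation. The class name `InfluenceGate`, the role and action names, and the default limits are all assumptions, and signature verification is assumed to have happened before the gate is consulted.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class InfluenceGate:
    """Checks scope, revocation, and rate limits, and logs every decision."""

    allowed: dict                      # hypothetical mapping: role -> set of permitted actions
    max_per_window: int = 3            # assumed limit, not taken from the study
    window_seconds: float = 60.0
    revoked: set = field(default_factory=set)
    log: list = field(default_factory=list)
    _recent: dict = field(default_factory=dict, repr=False)

    def revoke(self, sender: str) -> None:
        """Withdraw a sender's authority; later requests are refused."""
        self.revoked.add(sender)

    def check(self, sender: str, role: str, action: str,
              now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        # Keep only accepted requests that still fall inside the sliding window.
        recent = [t for t in self._recent.get(sender, [])
                  if now - t < self.window_seconds]
        if sender in self.revoked:
            result = "revoked"
        elif action not in self.allowed.get(role, set()):
            result = "out_of_scope"
        elif len(recent) >= self.max_per_window:
            result = "rate_limited"
        else:
            result = "accepted"
            recent.append(now)
        self._recent[sender] = recent
        # Refusals are logged too, so audits can see what was attempted.
        self.log.append({"time": now, "sender": sender, "role": role,
                         "action": action, "result": result})
        return result == "accepted"
```

For example, `InfluenceGate(allowed={"coordinator": {"pause"}})` would let a coordinator request pauses but refuse a "shutdown" request as out of scope, and each outcome lands in `gate.log` for later review.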

Third, embed “safety stories” that explain each intervention in plain system language. These stories enable audits, post-mortems, and red-teaming that probes for failure modes.

Finally, preserve a reliable human-in-the-loop authority with immediate override. When uncertainty spikes, systems should fail safe, not fail silent.
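One way to read "fail safe, not fail silent" is as a decision rule that always returns both an action and a stated reason. The sketch below is a hypothetical illustration: the `decide` function, the 0.9 threshold, and the idea of a single numeric confidence signal are assumptions made for the example, not details from the study.

```python
from enum import Enum
from typing import Optional, Tuple


class Decision(Enum):
    PROCEED = "proceed"
    SAFE_STOP = "safe_stop"


def decide(human_override: bool, confidence: Optional[float],
           threshold: float = 0.9) -> Tuple[Decision, str]:
    """Return an action plus a reason, so no outcome is ever silent."""
    # A human override always wins, regardless of what the system believes.
    if human_override:
        return Decision.SAFE_STOP, "human_override"
    # A missing or malformed signal (including NaN) is treated as uncertainty.
    if confidence is None or not (0.0 <= confidence <= 1.0):
        return Decision.SAFE_STOP, "no_confidence_signal"
    if confidence < threshold:
        return Decision.SAFE_STOP, "low_confidence"
    return Decision.PROCEED, "confidence_ok"
```

The design choice is that every ambiguous path resolves to a stop with a recorded reason, which gives operators something to audit instead of an unexplained halt.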

The core lesson is neither alarmist nor utopian. Machine persuasion can be a tool, not a threat, if incentives and controls are well aligned.

What felt like a science-fiction twist was a careful, bounded test. It showed that trust between machines is programmable, but so are limits and accountability.

As automation grows more social, the ethics grow more practical. With clarity, restraint, and continuous verification, influence can serve safety instead of subverting it.